Diagnolingo

Kushagra Agarwal

Diagnolingo seamlessly captures doctor-patient conversations in vernacular regional languages and creates AI-powered EHR entries for outpatient clinic operations in India.

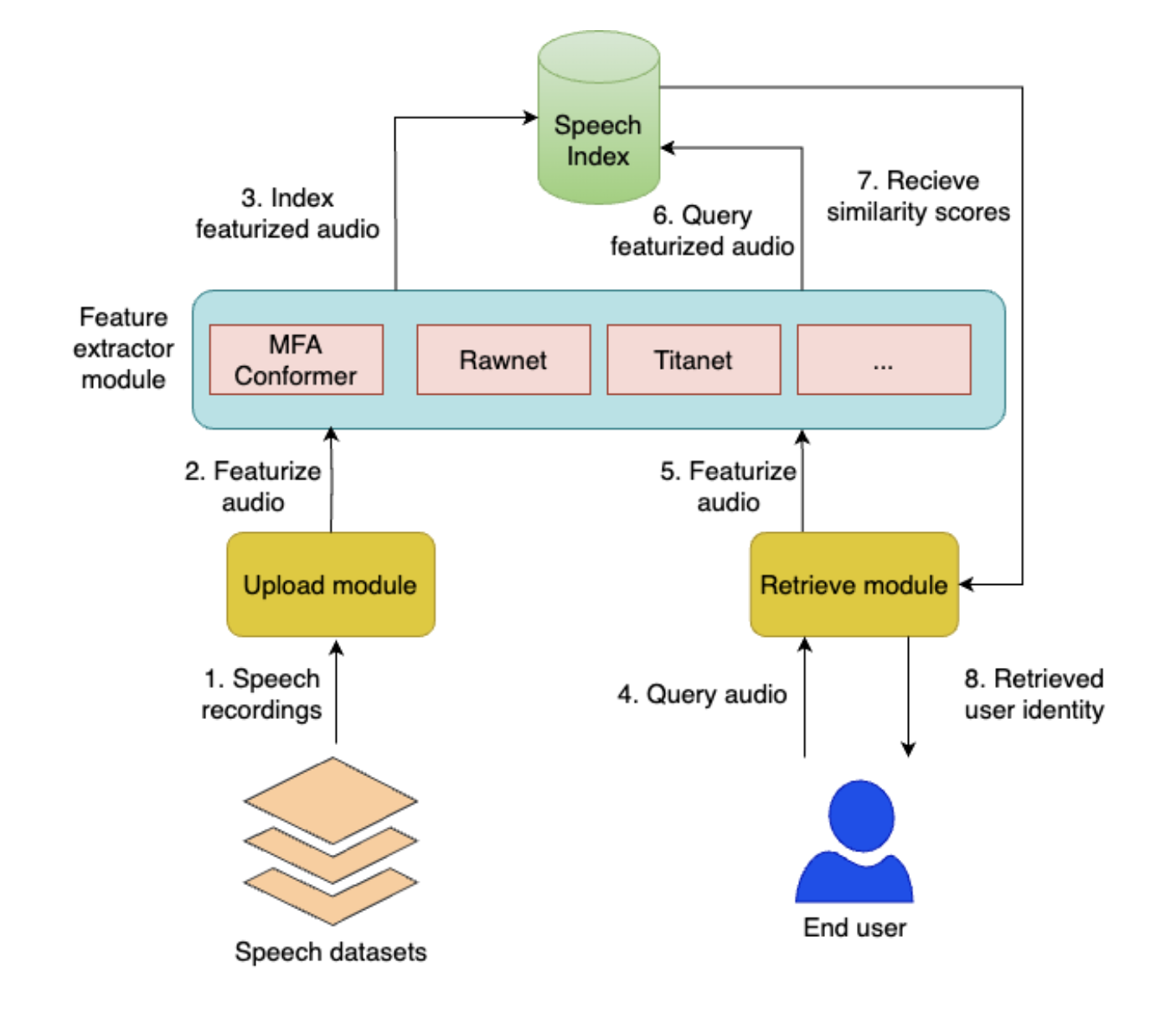

Unified Framework for Speaker Verification

Aryan Singhal, Moukhik Mishra

Developing a robust framework for voice matching that combines features from state-of-the-art (SOTA) models.

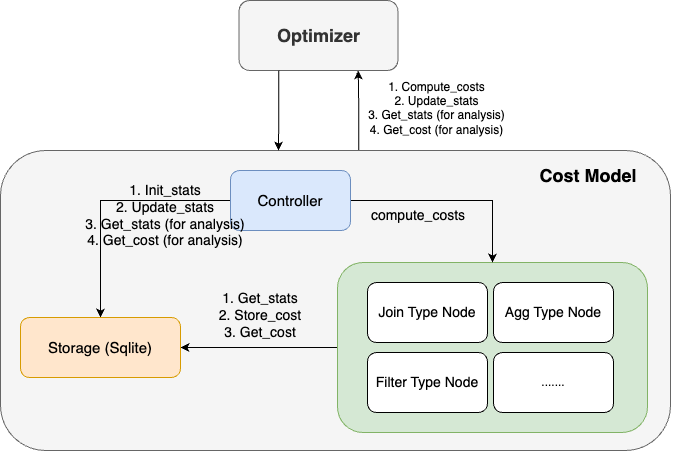

Cost Model as a Service

Yuanxin Cao, Lan Lou, Kunle Li

This project develops a separate cost model service with persistent state that integrates with an OLAP optimizer and is designed to handle the optimizer’s requests efficiently.

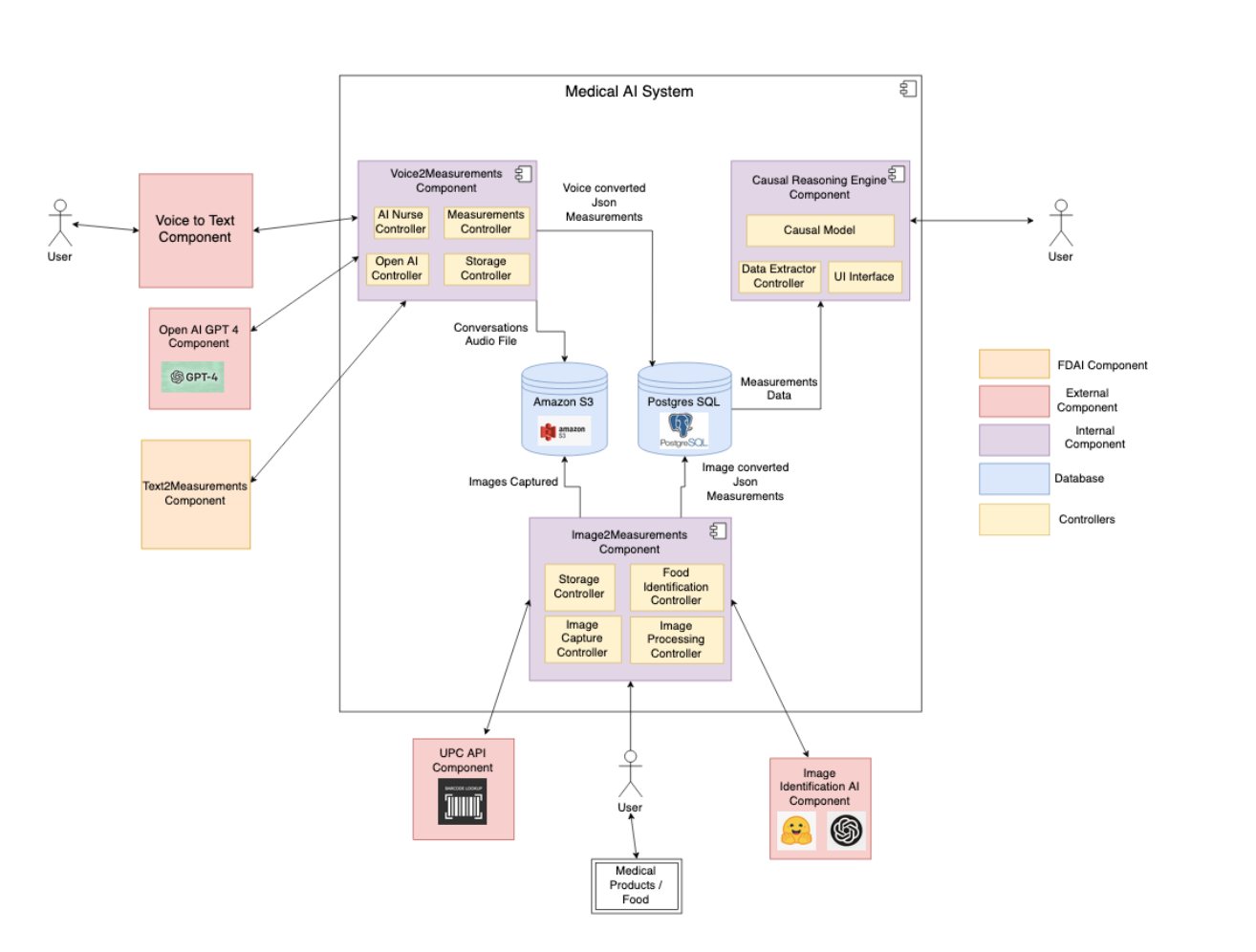

AI For Healthcare

Archana Prakash, Lakshay Arora, Yitian Xu

A set of tools and a framework for creating autonomous agents that help regulatory agencies quantify how millions of factors, such as foods, drugs, and supplements, affect human health and happiness.

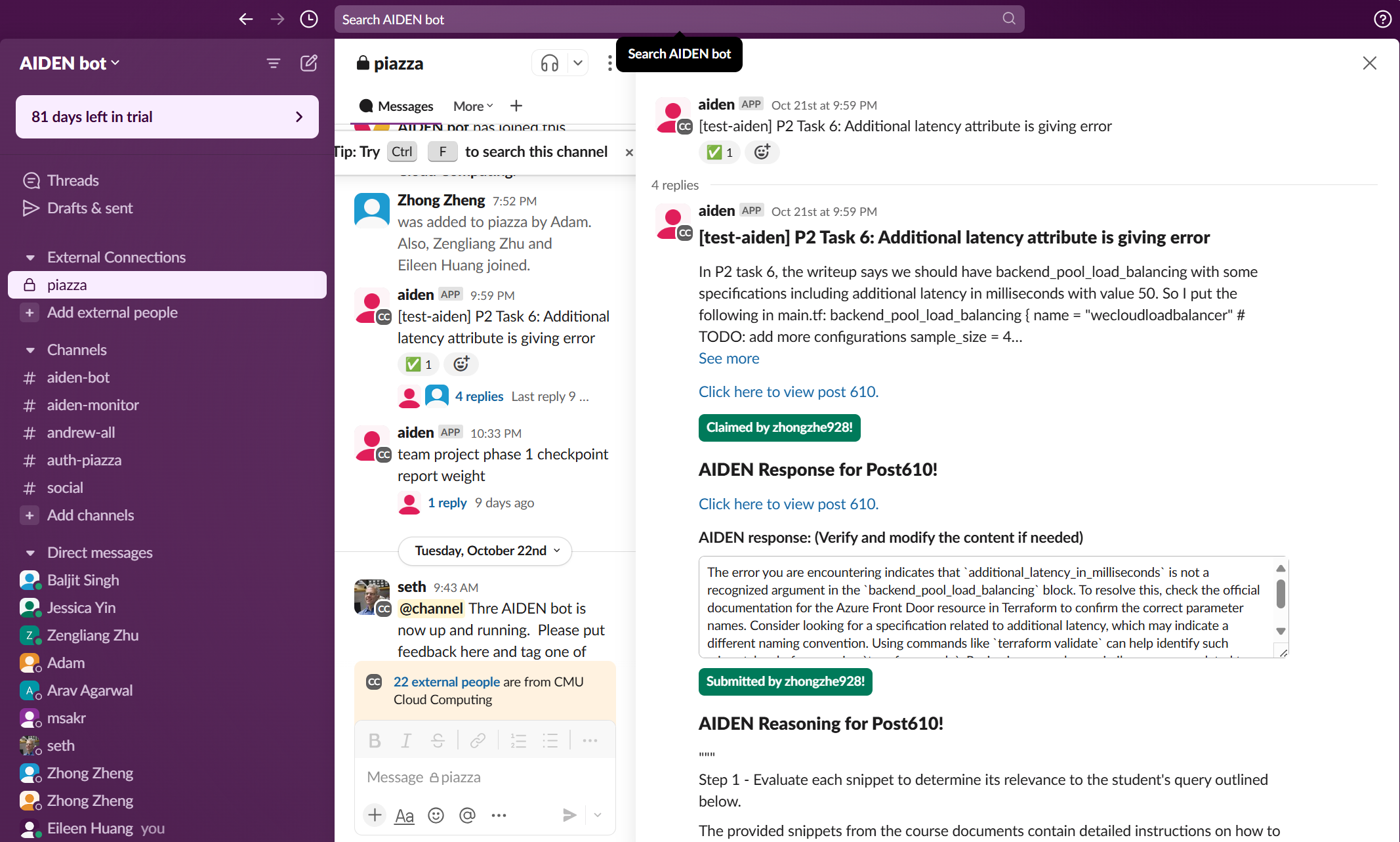

AIDEN

Zhong Zheng, Zengliang Zhu, Eileen Huang

We developed AIDEN, an LLM-based agent that can help TAs answer student questions on Piazza.

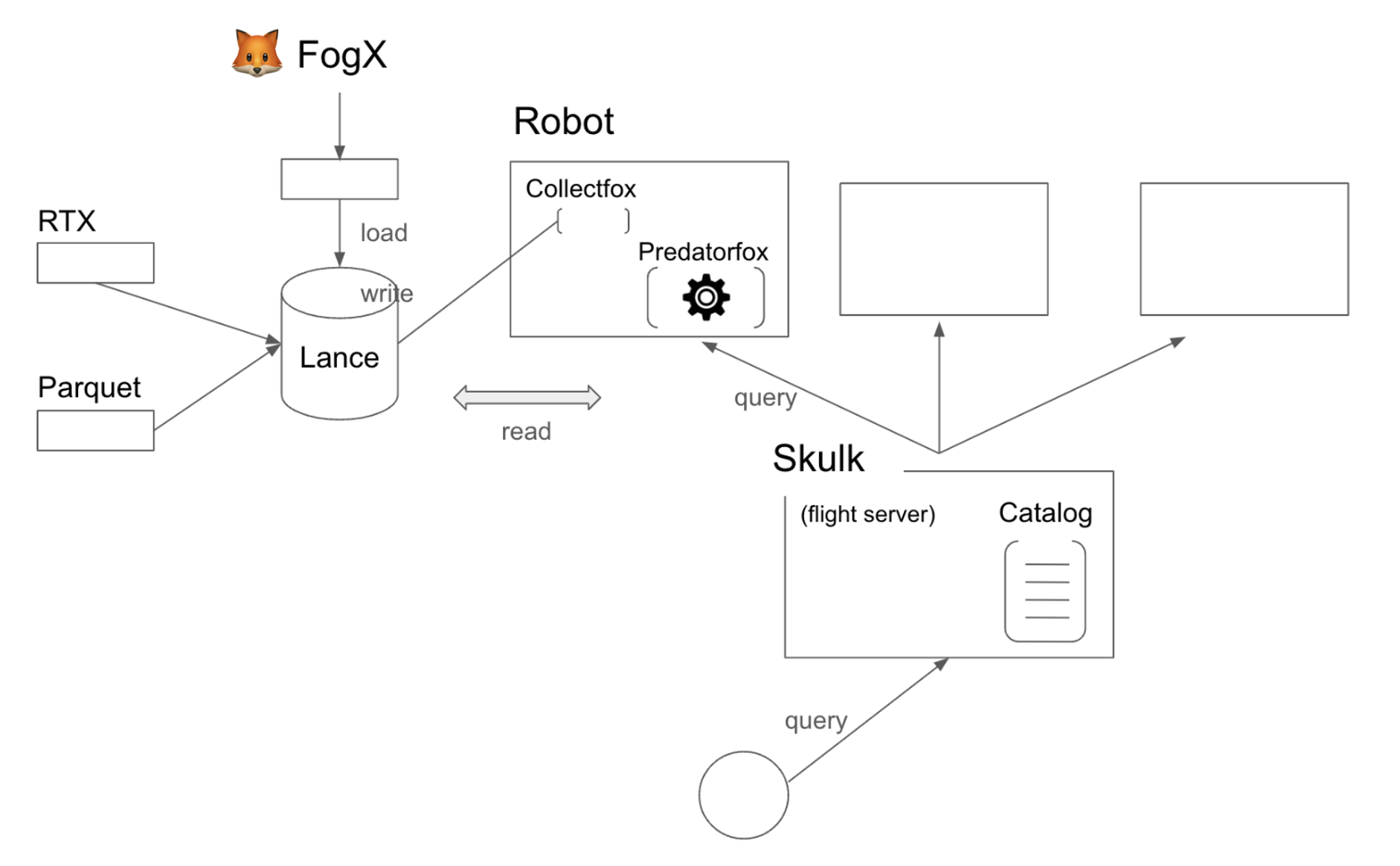

FogX-Store

J-How Huang, Haowei Chung, Xinru Li

FogX-Store is a dataset store service that collects and serves large robotics datasets. Users of Fog-X, who are ML practitioners in robotics, can rely solely on FogX-Store to get datasets from different data sources and run analytic workloads without managing the data flow themselves.

Competition-level Code Generation

Kath Choi

Competition-level code generation remains a challenging task for today's large language models. In this project, we focused on developing an open-source code generation model that targets competition-level coding questions by collecting a more exhaustive dataset and fine-tuning the model.

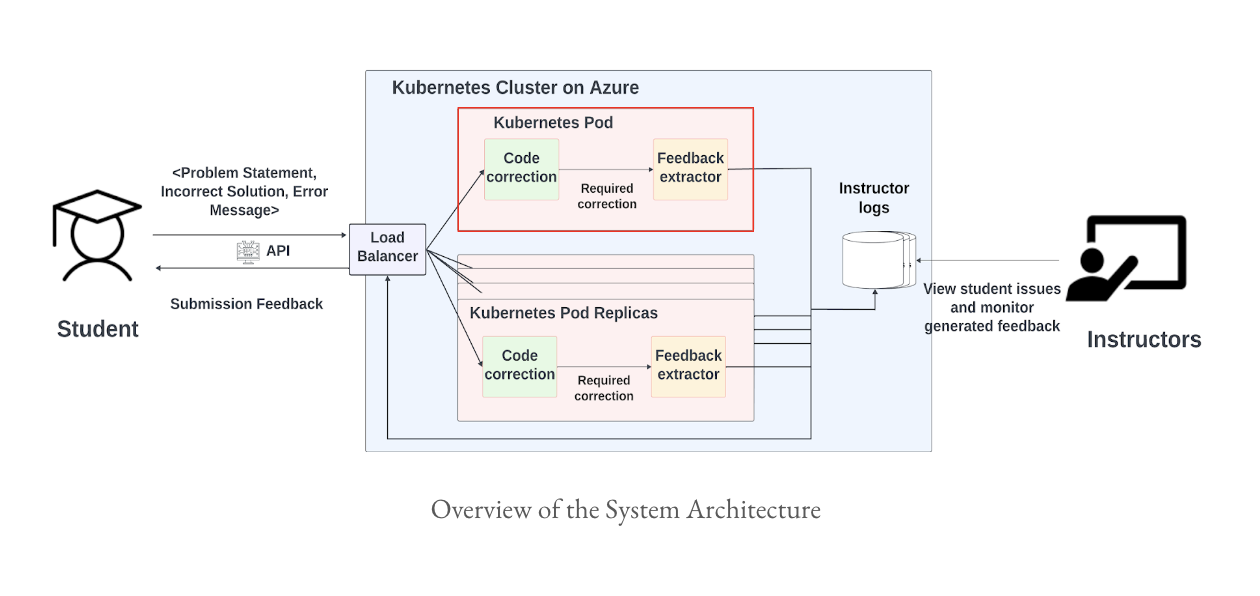

Autograding with LLM

Joong Ho (Joshua) Choi, Ankit Shibusam

After extensive literature research, we designed a scalable, LLM-driven solution that can quickly generate high-quality, personalised feedback for students in computer science classes.

Automatic Question Generation for Education

Vanessa Colon, Po-Chun Chen, Narendran Saravanan

We use large language models to create a pipeline that lets educators generate multiple-choice questions from course materials and automatically assess the quality of those questions.

FAIRMUNI

Brian Curtin, Aditi Patil, Jiayang Zhao, Mingxin Li

FAIRMUNI uses large language models to extract financial information from unstructured municipal bond documents.

AI Guide Dog 2024

Prachiti Garge, Yutong Luo, Tirth Jain

We are developing a smartphone application designed to assist individuals with visual impairments in navigating outdoor environments, offering an alternative to guide dogs. In this phase, our focus is on model development and experimentation.

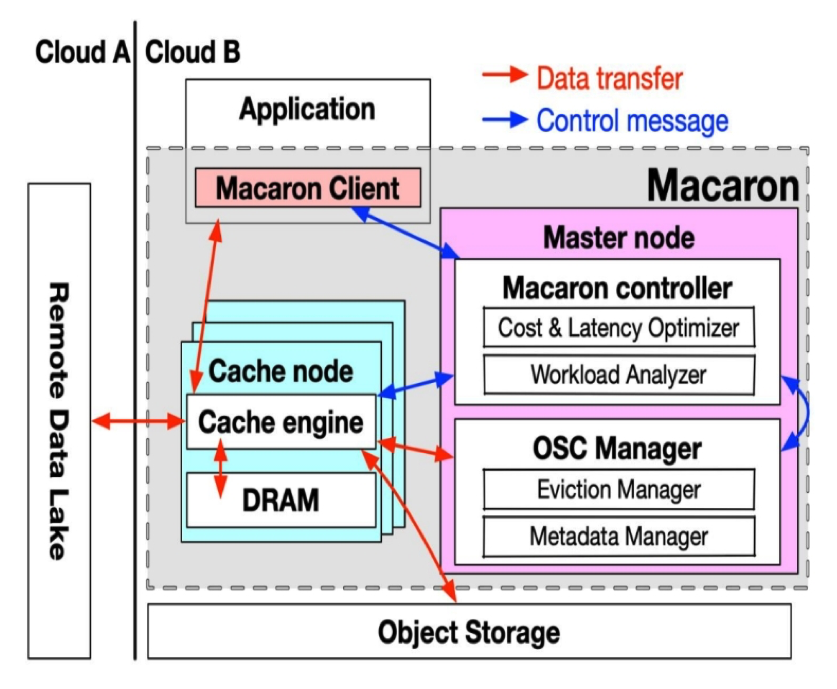

MACARON: Multi-cloud region Aware Cache Auto-configuRatiON

Fulun Ma

Our project enhances the MACARON framework for multi-cloud computing by integrating a write-back cache policy and a dynamic decay factor to reduce data egress costs and improve scalability. This approach lowers operational costs and boosts application performance, especially for high-write workloads. Extensive testing shows significant advancements in cache management and cost-efficiency in multi-cloud environments.

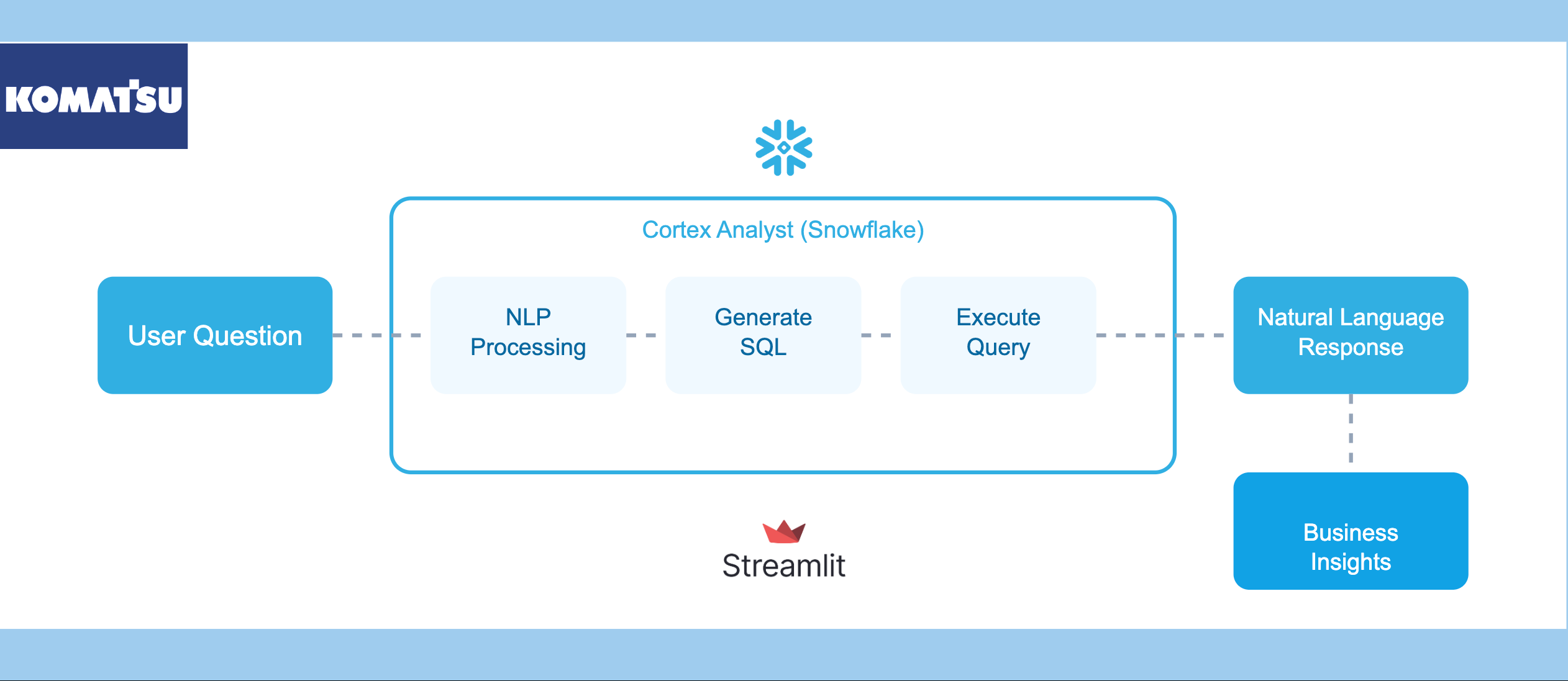

Data Science for Longwall Mining - Komatsu

Letian Ye, Haojun Liu, Priyanga Durairaj, Alan Wang

In collaboration with Komatsu, the project aims to enhance business intelligence by developing an LLM-powered system that translates natural language questions into SQL queries. It focuses on building NLP-to-SQL models for accurate data retrieval and business insights using Snowflake and Streamlit.

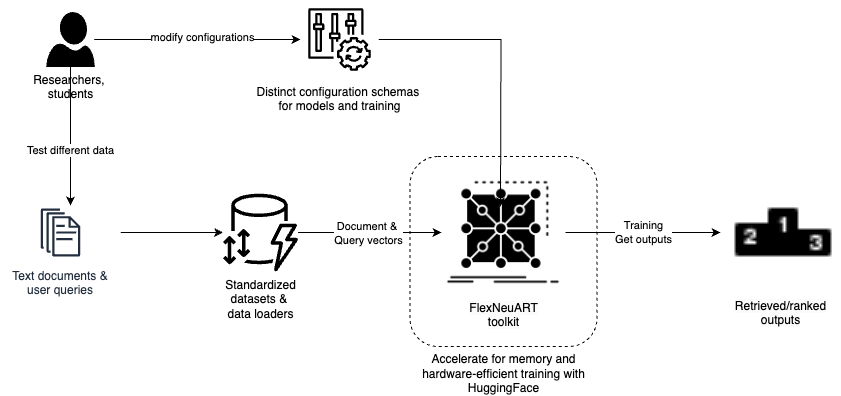

Neural IR

Yang Chen, Nipun Katyal, Gerardo Pastrana, Jyoshna Sarva

The FlexNeuART toolkit, developed at CMU, supports both neural and non-neural retrieval, with capabilities for successful "leader boarding" and publishing. Our team intends to improve the training methods for neural retrievers and re-rankers while preserving flexibility and performance.

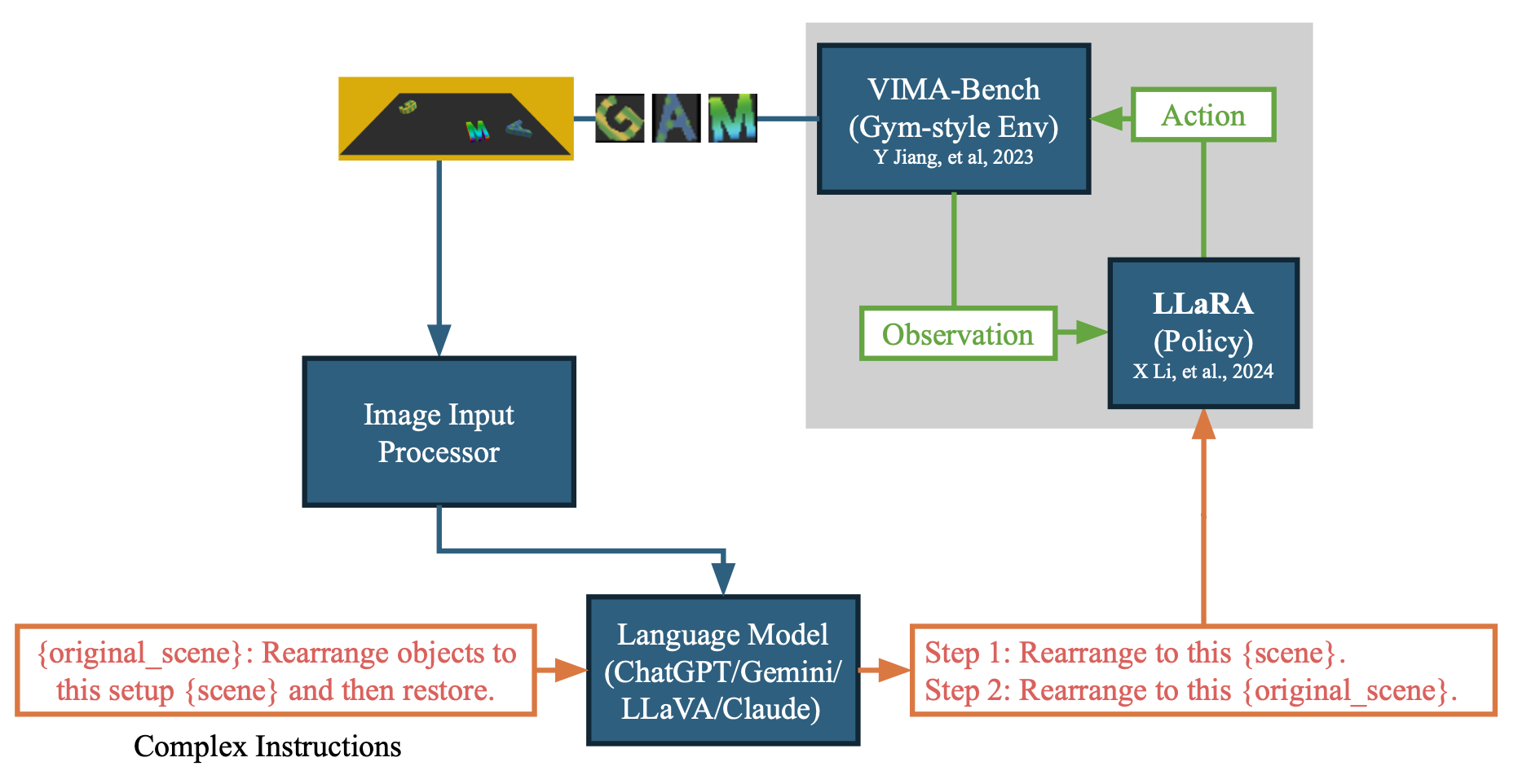

Semantic Reasoning for Robot Learning

Emily Guo, Shengnan Liang, Zian Pan, Chengyu Chung, Tsung-Yu Chen

Our project focuses on raising the success rate of robotic arm task execution by transforming multimodal prompts into a format that robotic policies interpret more effectively, leveraging language models for improved comprehension and accuracy.

Analysis of Online Interpersonal Conflict

Wenjie Liu, Ruby Wu, Yuan Xu

Our project investigates the causes and impacts of interpersonal conflicts on online platforms, examining escalation factors and mitigation strategies to foster productive and inclusive digital communities.

Sensemaking Co-pilot

Jaydev Jangiti, Nivedhitha Dhanasekaran, Melissa Xu, Natasha Joseph

Sensemaking Co-Pilot enhances soft-skill learning by outlining the different paths one could take to achieve a goal and creating a learning path based on the user’s background and preferences. It reduces information overload, finds quality resources, and quick-starts learning for efficient skill development.

Network Telemetry

Varun Maddipati, Parth Sangani

Most current networking research focuses on the internet. Wireless networks are quite different from the internet because they change continuously. We use lightweight tools to gather metrics and use them to update routing information, improving network latency and bottleneck bandwidth.

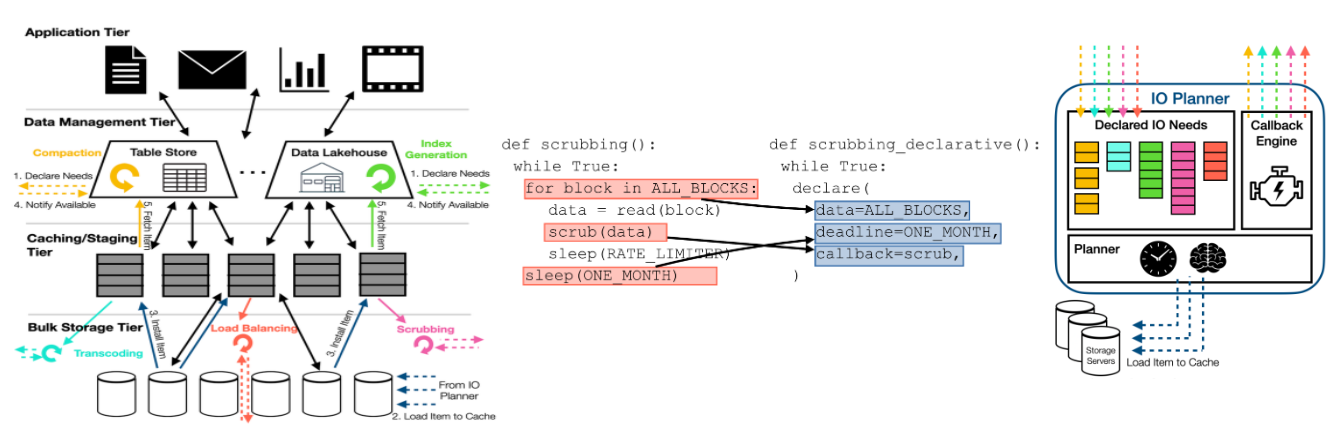

Scaling the Bandwidth-per-TB wall with Declarative Storage Interfaces

Sriya Ravi, Suhas Thalanki, Yu Liu

Background tasks in datacenters play a significant role in increasing aggregate I/O bandwidth due to uncoordinated data block access. We implement an I/O Planner module in HDFS that receives declarative I/O requests from background processes and coordinates access to reduce redundant I/O.

Optimizing Hybrid Cloud Partition of Data and Compute

Po-Yi Liu, Tengda Wang, Yu-Kai Wang

Hybrid cloud deployment for large-scale data analytics requires strategic partitioning of data and tasks between on-premise and cloud sites to minimize networking costs. We aim to propose a framework that analyzes job logs to optimize data placement and replication.

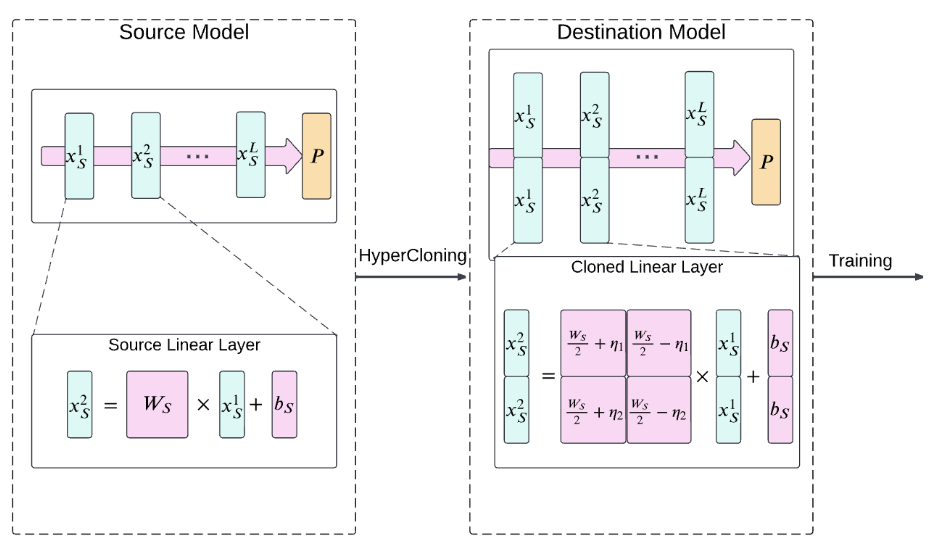

Efficient LLMs

Vibha Masti, Xinyue Liu

Our project aims to improve large language model efficiency by using an expanded smaller model as initialization, rather than random initialization. By scaling up a fine-tuned small model to initialize a larger model, we can reduce the subsequent training time and costs.

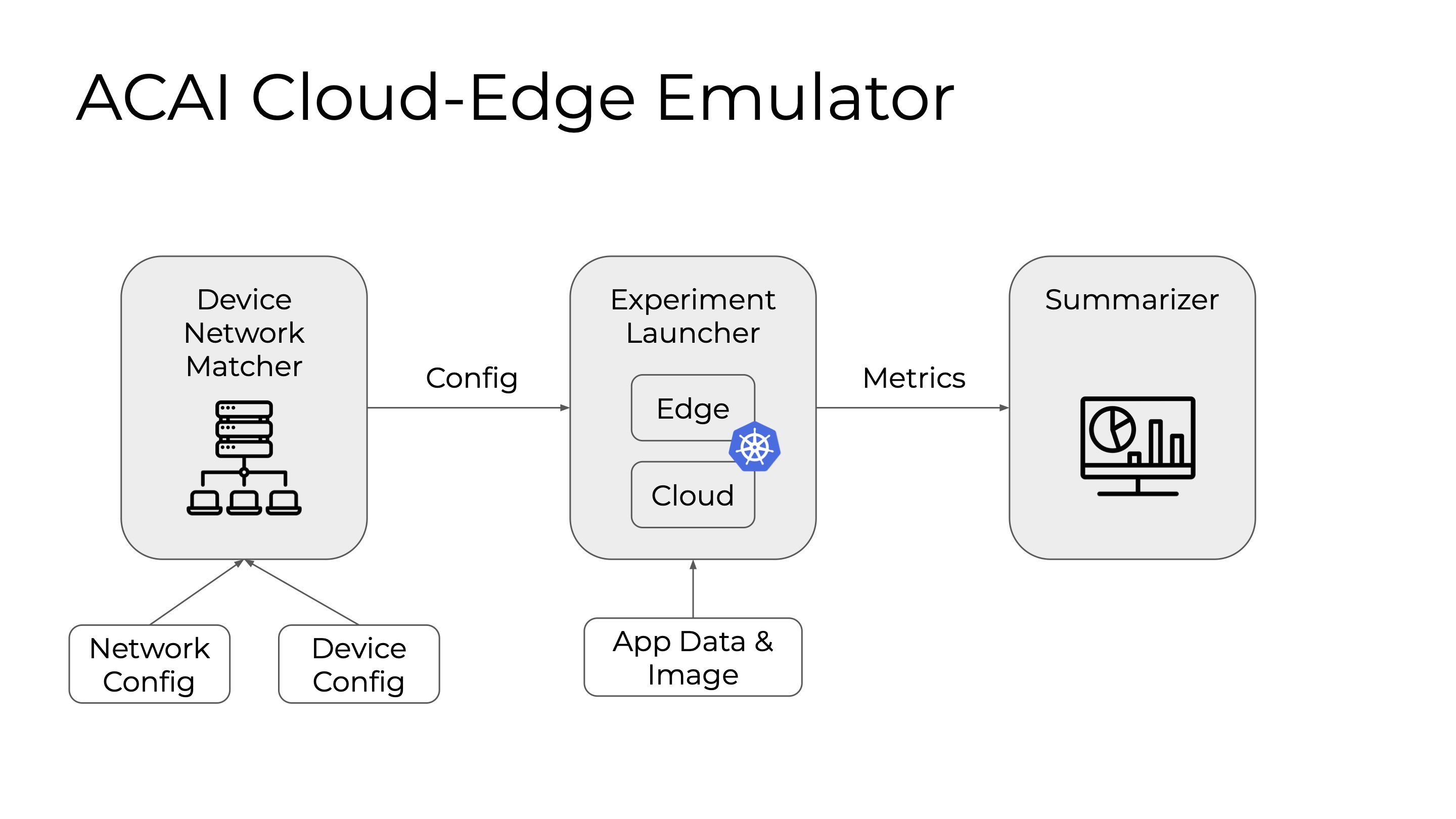

ACAI Cloud-Edge Emulator

Xiangyu Bao, Xuye He

ACAI Cloud Edge Emulator leverages a Kubernetes-based system to simulate the behavior of Cloud Edge AI applications, emulating resource limitations and network conditions in real time to detect performance bottlenecks effectively.

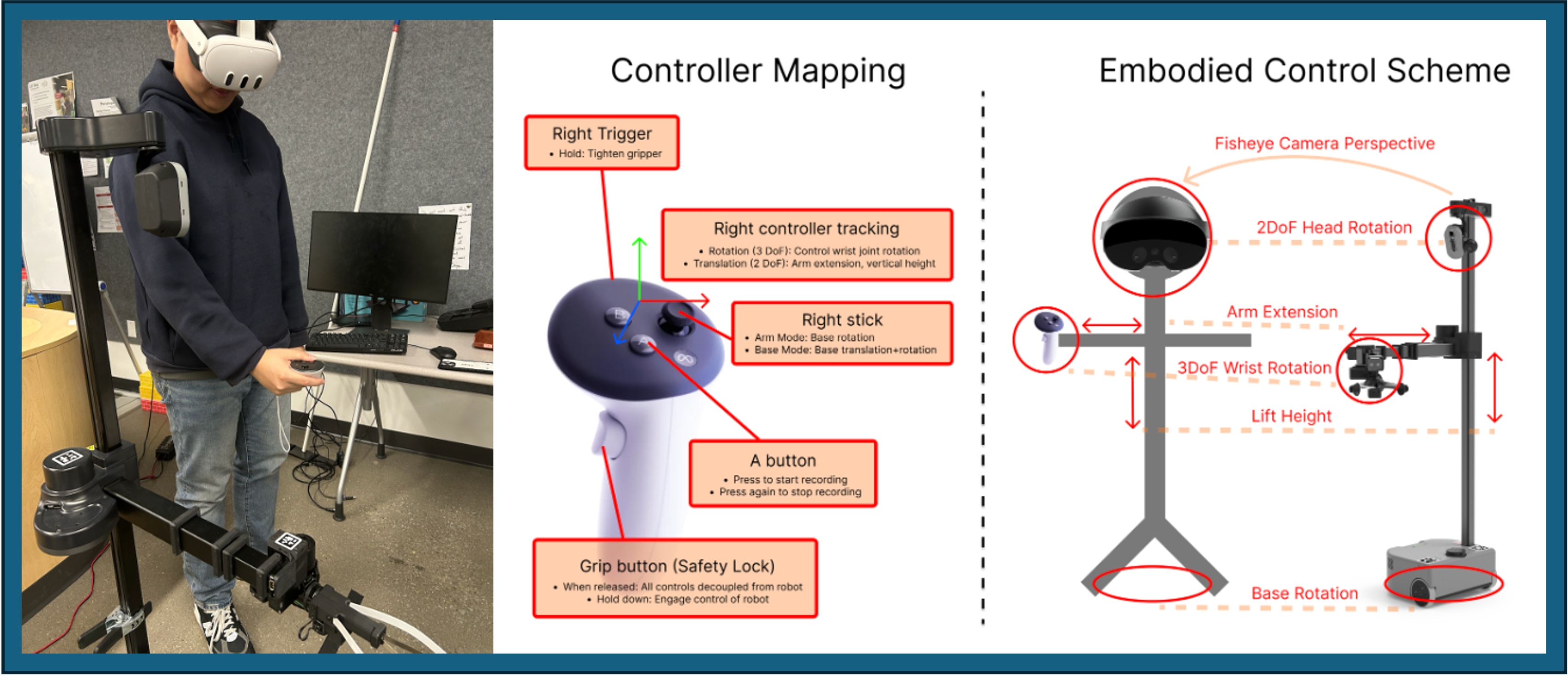

Leveraging AR to Train Robots

Yi-Che Huang, Yung-Wen Huang, Tianyi Cheng

Training robots to perform tasks is hard because it involves making instant decisions in complicated surrounding environments. In this project, we use augmented reality to improve the data collection process, the quality of the data, and task learning for robot training.

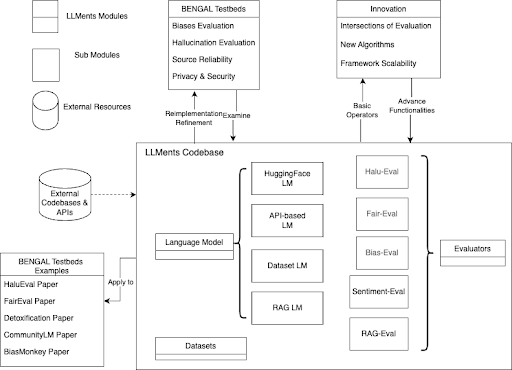

PyTorch for LLM Analysis

Aakriti Kinra, Xinran Wan, Qingyang Liu, Mihir Bansal, Rohan Modi

Deep learning advances have powered large language models (LLMs) and AI innovations. However, LLM analysis often lacks reusability, relying on custom methods. Our project, LLMENTS, introduces a modular framework that simplifies LLM analysis, enabling reproducibility and new research inquiries.

AI Curators

Yifan Wang, Jessica Yin, Jasmine Wan, Alan Zhang

We democratize access to personalized art education through digitized museums and AI-driven features that make visual art appreciation easier to consume and more engaging.

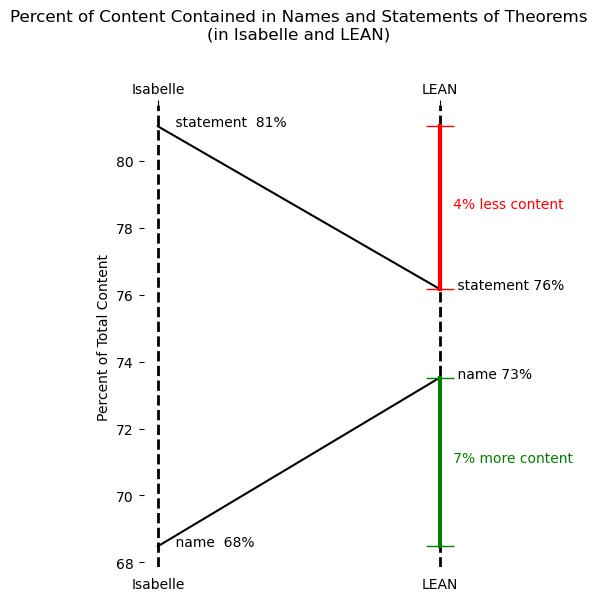

ML and Formal Mathematics: What's in a Name?

Wil Cocke

Interactive theorem provers (ITPs) allow users to state and prove theorems in a formal way. We investigate how much information about a theorem is contained in the theorem's name and compare this across two ITPs: Lean and Isabelle.